Foreword: Beyond JavaScript Development

Before I started React/Vue/Angular development, I spent a few years building iOS and Android mobile applications that use the MVVM architecture.

On those platforms, we routinely offload all kinds of I/O and computational tasks from the UI thread, so that it never becomes too busy and unresponsive.

This rule doesn't apply to current web development.

Current web development offloads tasks to a web worker ONLY when the application encounters performance issues, NOT because of the tasks' nature.

As we all know, web workers are designed to offload work from the UI thread, so why don't developers use them by default?

Painful and Time-consuming Web Worker Development

I cannot deny that, by JavaScript's execution design (a single main thread driven by an event loop), threads (workers) do not share memory by default; SharedArrayBuffer is the explicit opt-in exception.

That's why web workers are designed in a message-driven way. All communication happens through posted messages, which are copied (structured-cloned) unless they are explicitly transferred. For example:

// main.js

const first = document.querySelector('#number1');

const second = document.querySelector('#number2');

const result = document.querySelector('.result');

if (window.Worker) {

const myWorker = new Worker("worker.js");

first.onchange = function() {

myWorker.postMessage([first.value, second.value]);

console.log('Message posted to worker');

}

second.onchange = function() {

myWorker.postMessage([first.value, second.value]);

console.log('Message posted to worker');

}

myWorker.onmessage = function(e) {

result.textContent = e.data;

console.log('Message received from worker');

}

} else {

console.log('Your browser doesn\'t support web workers.');

}

// worker.js

onmessage = function(e) {

console.log('Worker: Message received from main script');

const result = e.data[0] * e.data[1];

if (isNaN(result)) {

postMessage('Please write two numbers');

} else {

const workerResult = 'Result: ' + result;

console.log('Worker: Posting message back to main script');

postMessage(workerResult);

}

}

It makes parallel processing difficult and requires a deep understanding of workers to do it right.

Here are the pain points that JavaScript application developers might encounter:

- Creating a web worker and importing existing npm modules into it is painful.

- Coding in a weakly-typed language with a loosely message-driven style is unintuitive, time-consuming, repetitive, and harder to debug.

- Working in an async loading UI pattern requires extra UI states to handle it.

Because of the learning curve of web workers, we are tempted to do everything on the UI thread, such as:

- making fragmented JSON-RPC / API calls and then aggregating the results into one view model

- running a large GraphQL query and then applying a lot of data transformations

- sorting, filtering, and reducing different kinds of data triggered by UI actions

In 2020, advances in web technology offer a way out of this pain.

Web Technology Advancement

Comlink is an RPC abstraction that turns message-driven web workers into promise-like function calls. It works with webpack (through comlink-loader), so npm modules can be imported into the web workers without extra wiring.
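To illustrate the idea of turning messages into promise-like calls, here is a minimal sketch of an RPC layer over a `postMessage`-style endpoint. It is not Comlink's implementation or API (Comlink uses proxies and handles much more). The names `Endpoint`, `createChannel`, `expose`, and `wrap` are hypothetical, and the in-memory channel is an assumption made only so the sketch runs without a real Worker.

```typescript
// Simplified stand-in for the message surface of a Worker (assumption, not the DOM type).
interface Endpoint {
  postMessage(message: unknown): void
  onmessage: ((event: { data: any }) => void) | null
}

type RpcRequest = { id: number; method: string; args: unknown[] }
type RpcResponse = { id: number; ok: boolean; value: unknown }

// Hypothetical in-memory channel: messages are copied and delivered asynchronously.
function createChannel(): [Endpoint, Endpoint] {
  const deliver = (target: Endpoint, message: unknown): void => {
    // A JSON round-trip stands in for the copy a real worker message goes through.
    const copy = JSON.parse(JSON.stringify(message))
    Promise.resolve().then(() => target.onmessage?.({ data: copy }))
  }
  const a: Endpoint = { onmessage: null, postMessage: () => {} }
  const b: Endpoint = { onmessage: null, postMessage: m => deliver(a, m) }
  a.postMessage = m => deliver(b, m)
  return [a, b]
}

// "Worker" side: answer each request message with a response message.
function expose(api: Record<string, (...args: any[]) => unknown>, endpoint: Endpoint): void {
  endpoint.onmessage = async event => {
    const { id, method, args } = event.data as RpcRequest
    try {
      endpoint.postMessage({ id, ok: true, value: await api[method](...args) })
    } catch (err) {
      endpoint.postMessage({ id, ok: false, value: String(err) })
    }
  }
}

// "Main" side: each call becomes a promise that settles when the matching response arrives.
function wrap(endpoint: Endpoint): (method: string, ...args: unknown[]) => Promise<unknown> {
  let nextId = 0
  const pending = new Map<number, { resolve: (v: unknown) => void; reject: (e: unknown) => void }>()
  endpoint.onmessage = event => {
    const { id, ok, value } = event.data as RpcResponse
    const entry = pending.get(id)
    if (!entry) return
    pending.delete(id)
    if (ok) entry.resolve(value)
    else entry.reject(new Error(String(value)))
  }
  return (method, ...args) =>
    new Promise((resolve, reject) => {
      const id = nextId++
      pending.set(id, { resolve, reject })
      endpoint.postMessage({ id, method, args })
    })
}

const [mainSide, workerSide] = createChannel()
expose({ multiply: (a: number, b: number) => a * b }, workerSide)
const call = wrap(mainSide)
call('multiply', 6, 7).then(result => console.log(result)) // 42
```

The request id is what lets several calls be in flight at once: responses can arrive in any order and still settle the right promise.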

React's experimental Concurrent Mode exposes the <Suspense> component for asynchronous data, which helps boost developer productivity when handling asynchronous data without sacrificing the power of a declarative programming style.

I sincerely believe every React developer should explore these techniques and apply forward-compatible, Suspense-ready solutions to their new or existing code bases.

Creating a React Application with Experimental Features

First, I studied the experimental React <Suspense> contract and researched the latest web worker abstractions. Combining it with Comlink, which abstracts message-driven web workers into promise-like RPC calls, I created react-suspendable-contract, a high-level, experimental abstraction that lets any promise-like fulfill the <Suspense> contract.

import { DependencyList, ReactElement, useMemo } from 'react'

const PENDING = Symbol('suspense.pending')

const SUCCESS = Symbol('suspense.success')

const ERROR = Symbol('suspense.error')

type RenderCallback<T> = (data: T) => ReactElement<any, any> | null

export interface SuspendableProps<T> {

data: () => T

children: RenderCallback<T>

}

/**

* Simple wrapper indicating that the component is suspendable.

* `useSuspendableData` creates the contract that is consumed by the <Suspense> component

*

* ```js

* const UserPage = ({ userId }) => {

* const suspendableData = useSuspendableData(() => getUserAsync({ id: userId }), [userId])

*

* return (

* <Suspense fallback={<Loading />}>

* <Suspendable data={suspendableData}>

* {data => <UserProfile user={data}/>}

* </Suspendable>

* </Suspense>

* )

* }

* ```

*

* @param data

* @param children

* @constructor

*/

export const Suspendable = <T>({ data, children }: SuspendableProps<T>) => {

return children(data())

}

/**

* `useSuspendableData` will only execute the promiseProvider when one of the `deps` has changed.

*

* Note: internally uses `useMemo` to keep track of `deps` changes

*

* @param promiseProvider

* @param deps

*/

export function useSuspendableData<T>(

promiseProvider: () => PromiseLike<T>,

deps: DependencyList | undefined,

): () => T {

if (typeof promiseProvider !== 'function') {

throw Error('promiseProvider is not a function')

}

return useMemo(() => {

let status = PENDING

let error: any

let result: T

const suspender = Promise.resolve()

.then(() => promiseProvider())

.then(r => {

status = SUCCESS

result = r

})

.catch(err => {

status = ERROR

error = err

})

return () => {

switch (status) {

case PENDING:

throw suspender

case ERROR:

throw error

case SUCCESS:

return result

default:

throw Error('internal error. This should not happen')

}

}

}, deps)

}

Then, I created a sample React application with 300 items in react-virtualized, a popular virtual list library. The application evaluates a simple math function when items become visible as the user scrolls.
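As an aside on the hook above: the contract it relies on is that a pending read throws a promise, a failed read throws the error, and a finished read returns the value. The sketch below shows that convention without React, together with a toy "boundary" that catches the thrown promise, waits, and retries. `createReader`, `isThenable`, and `renderWhenReady` are hypothetical names, and React's real <Suspense> handling is considerably more involved than this loop.

```typescript
type ReadState<T> =
  | { status: 'pending' }
  | { status: 'success'; value: T }
  | { status: 'error'; error: unknown }

// Wrap a promise in a synchronous reader that follows the throw-a-promise convention.
function createReader<T>(promise: PromiseLike<T>): () => T {
  let state: ReadState<T> = { status: 'pending' }
  const suspender = Promise.resolve(promise).then(
    value => { state = { status: 'success', value } },
    error => { state = { status: 'error', error } },
  )
  return () => {
    const current = state
    if (current.status === 'pending') throw suspender // "not ready yet, wait for this"
    if (current.status === 'error') throw current.error
    return current.value
  }
}

function isThenable(x: unknown): x is PromiseLike<unknown> {
  return typeof x === 'object' && x !== null && typeof (x as { then?: unknown }).then === 'function'
}

// Toy boundary: run the render, and if it throws a thenable, show the fallback and retry later.
async function renderWhenReady<T>(render: () => T, onFallback: () => void): Promise<T> {
  for (;;) {
    try {
      return render()
    } catch (thrown) {
      if (!isThenable(thrown)) throw thrown // a real error, left to an error boundary
      onFallback()
      await thrown
    }
  }
}

const read = createReader(Promise.resolve(21).then(n => n * 2))
renderWhenReady(() => `value: ${read()}`, () => console.log('loading...'))
  .then(output => console.log(output))
// prints "loading..." and then "value: 42"
```

Because the reader is synchronous from the caller's point of view, the rendering code stays declarative: it simply reads the data and lets the surrounding boundary deal with the waiting.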

I added a compute function that burns some CPU time.

// Simply write the computational function as usual

// Comlink will do the magic to pack this function into a web worker

// nothing special, just a compute task that keeps the CPU busy

export function compute(base: number, pow: number): number {

let result = 0

let i = 0

const len = Math.pow(base, pow)

while (i < len) {

result += Math.sin(i) * Math.sin(i) + Math.cos(i) * Math.cos(i)

i++

}

return result

}

I implemented four scenarios to process a virtual list calculation when the item becomes visible:

- UI Thread (Blocking)

- Web Worker (Singleton)

- Web Worker (Pool)

- Web Worker (Dedicated Worker)
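For the pool scenario, rows are assigned to workers round-robin (the appendix code uses `workerPool[index % poolSize]`). Here is a minimal sketch of that distribution, kept independent of real workers so it can run anywhere. `createPool` and `pickWorker` are hypothetical helper names, and the size guard is my own addition, assuming the pool size may come from a text field where an empty value converts to 0.

```typescript
// Create `size` workers with the given factory; fall back to 1 for invalid sizes
// (0, negative, NaN, or fractional), since `index % 0` would never select a worker.
function createPool<W>(size: number, factory: () => W): W[] {
  const safeSize = Number.isInteger(size) && size > 0 ? size : 1
  return Array.from({ length: safeSize }, () => factory())
}

// Round-robin: row 0 goes to worker 0, row 1 to worker 1, and so on, wrapping around.
function pickWorker<W>(pool: readonly W[], index: number): W {
  return pool[index % pool.length]
}

// Plain objects stand in for workers here.
let nextWorkerId = 0
const pool = createPool(4, () => ({ id: nextWorkerId++ }))
console.log([0, 1, 2, 3, 4, 5].map(row => pickWorker(pool, row).id)) // [0, 1, 2, 3, 0, 1]
```

Round-robin by row index is the simplest policy. It does not account for how busy each worker is, so a pool that tracks in-flight jobs could balance uneven workloads better.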

Let's have a quick look at the final research deliverables.

Live Demo

A video recording shows a web-worker-powered web application that can use all 16 threads of an 8-core CPU.

"In 2020, if someone blames the lag of a web application on a single-threaded limitation, they don't know the Web. A correctly implemented web application can use ALL your CPU cores to process your business logic."

LoGap (@gaplo917), September 20, 2020. Live Demo: https://t.co/PpyfUbzKyJ

Try the embedded demo, or the Full Page Live Demo: scroll through the "Virtual List with 300 Items" and change the "Mode" to see the different effects!

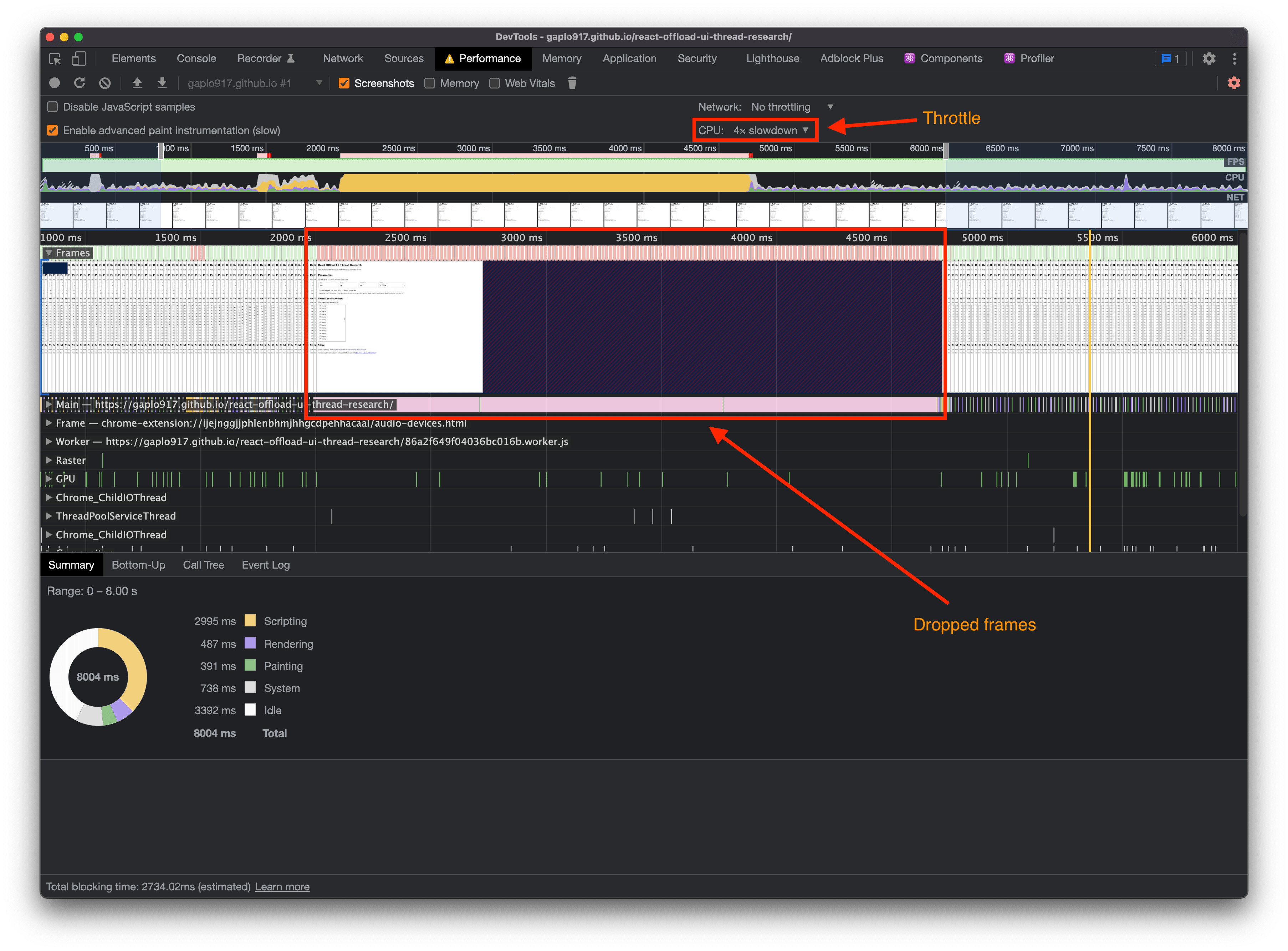

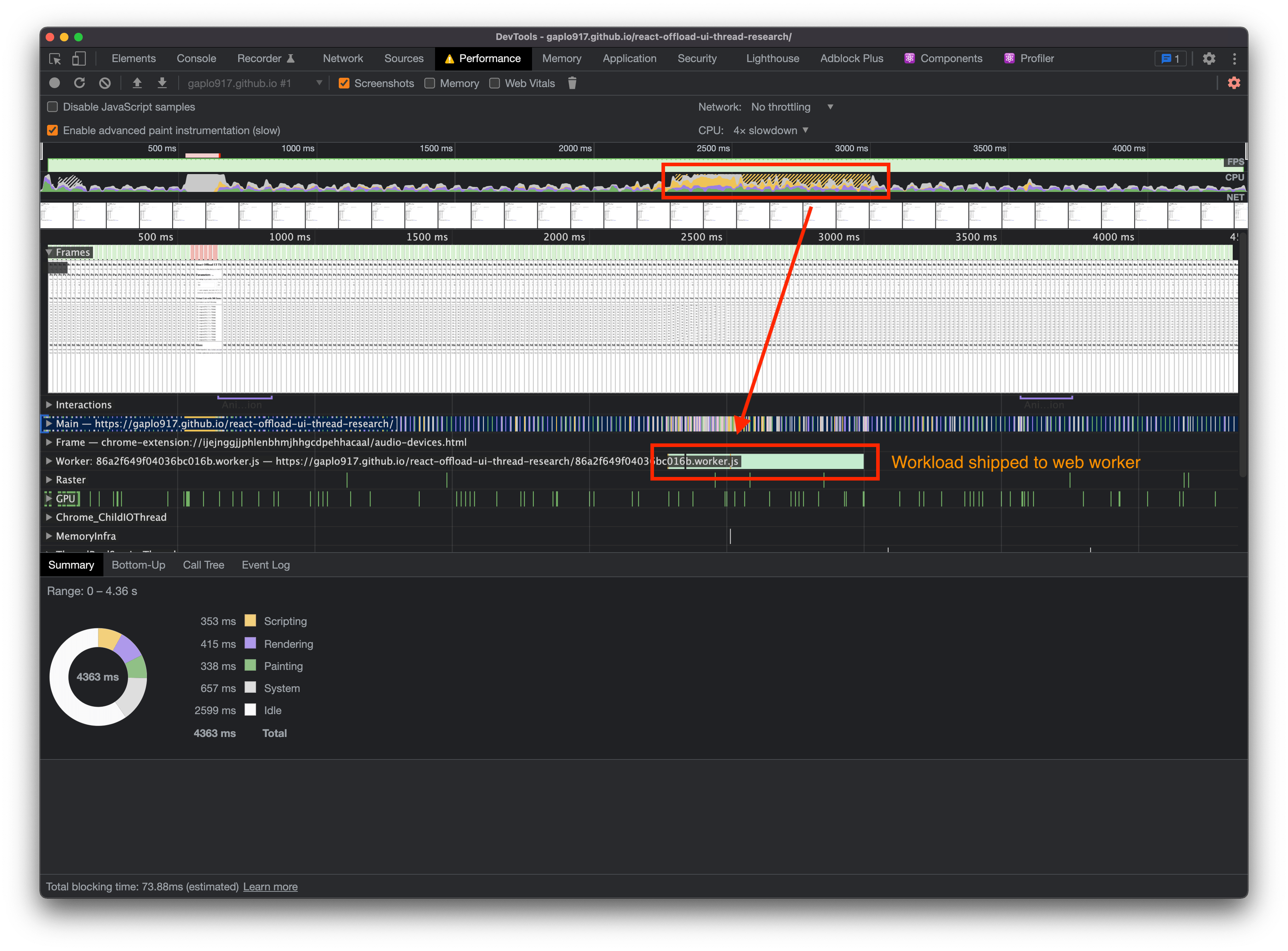

Someone might claim that:

My application reaches 60 fps most of the time on my iPhone 12 Pro Max / i9 MacBook Pro...

Try running the demo with the "CPU 4x slowdown" option under "Performance" in the browser's developer tools.

Also, note that all the previous demos and screenshots only consider 60 fps...

120Hz is coming

Ever since the 2nd-gen iPad Pro added 120Hz support, I have believed that 120Hz web browsing is coming soon. The higher the frame rate, the less time the UI thread has to process each frame.

Going from 60Hz to 120Hz shrinks the per-frame budget for a smooth UI (no dropped frames) from 16.67ms to 8.33ms, half of the time we had in the previous decade!
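The budget figures are simply 1000 ms divided by the refresh rate. A small sketch of that arithmetic follows; `frameBudgetMs` and `fitsInFrame` are hypothetical helper names, and the 10 ms task is an illustrative number rather than a measurement.

```typescript
// Time available per frame, in milliseconds, at a given refresh rate.
function frameBudgetMs(refreshRateHz: number): number {
  if (!(refreshRateHz > 0)) throw new RangeError('refreshRateHz must be positive')
  return 1000 / refreshRateHz
}

// Whether a UI-thread task of `taskMs` fits inside a single frame.
function fitsInFrame(taskMs: number, refreshRateHz: number): boolean {
  return taskMs <= frameBudgetMs(refreshRateHz)
}

console.log(frameBudgetMs(60).toFixed(2))  // "16.67"
console.log(frameBudgetMs(120).toFixed(2)) // "8.33"
console.log(fitsInFrame(10, 60))  // true: a 10 ms task fits a 60Hz frame
console.log(fitsInFrame(10, 120)) // false: the same task drops a frame at 120Hz
```

In practice the browser also needs part of each frame for style, layout, and paint, so the time left for application code is smaller than these upper bounds.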

An I/O call being non-blocking on the UI thread doesn't mean that it uses no UI thread CPU time.

A non-blocking HTTP I/O call doesn't block the UI thread immediately, but handling it does consume the UI thread's CPU time. If the UI thread doesn't have sufficient CPU time left to process the UI, it will eventually drop frames.

For instance, when an application makes many concurrent I/O calls, their handling cumulatively eats up the UI thread's CPU time at the same moment and can easily cause dropped frames. What's more, developers tend to implement business requirements right after the I/O calls, such as data transformation, data validation, and UI state transformation, all of which are blocking operations.

As business requirements become more and more complex, using web workers becomes unavoidable if you want to build a smooth 120Hz web application without hassle.

A more rigorous approach is to develop your application under "CPU 4x slowdown" and aim to stay at 60 fps. If it does, your application can complete its UI-thread workload smoothly on low-end devices, and there should be no significant lag in high-end 120Hz browsing.

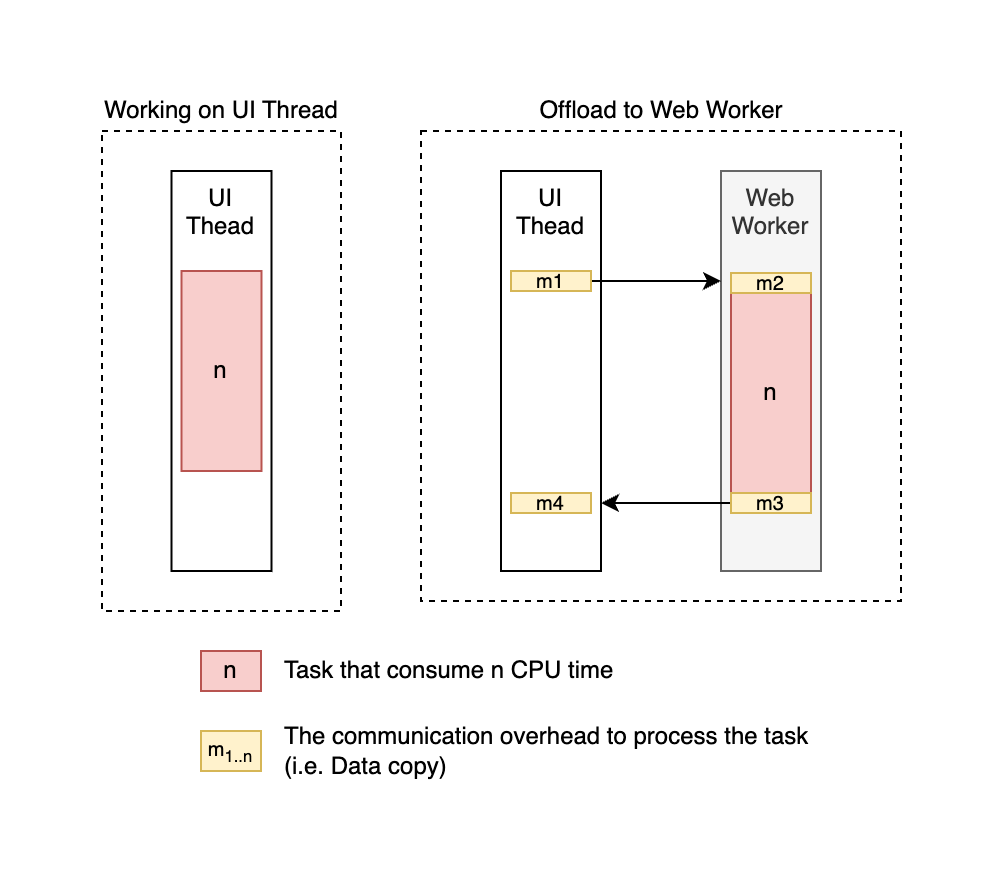

Understanding the Trade-off

Assume that processing a task on the UI thread costs n CPU time. Offloading the same task to a web worker costs n + m CPU time in total, where m is the offloading overhead.

In the micro view, we trade more total CPU time for the same task in exchange for saving the UI thread's CPU time.

But in the macro view, if a janky UI drives users to unnecessary and repetitive actions such as refreshing the whole page, those actions can easily take 100 to 1000x more CPU time than the overhead.

If m is much smaller than n, it is worth reserving the UI thread CPU time for future complex changes.
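The trade-off can be put into numbers with a tiny sketch. `estimateOffload` is a hypothetical helper, and the 50 ms task and 2 ms overhead below are made-up illustrative values, not measurements from the demo.

```typescript
interface OffloadEstimate {
  totalCpuMs: number       // n + m: total CPU time spent when the task is offloaded
  relativeOverhead: number // m / n: how large the overhead is compared with the task itself
}

// n = taskMs (cost of the task), m = overheadMs (cost of offloading it).
function estimateOffload(taskMs: number, overheadMs: number): OffloadEstimate {
  return {
    totalCpuMs: taskMs + overheadMs,
    relativeOverhead: overheadMs / taskMs,
  }
}

console.log(estimateOffload(50, 2)) // { totalCpuMs: 52, relativeOverhead: 0.04 }
```

With these assumed values, 4% extra total CPU time removes a 50 ms task from the UI thread, which would otherwise exceed a 16.67 ms frame budget several times over.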

Conclusion

The Comlink abstraction (which turns a web worker into RPC-style function calls) and React Concurrent Mode have arrived.

I think it is time to start thinking about adopting web workers in all cases and completely decoupling the data access layer: use the browser's web workers in background threads for all I/O and data processing by nature, and then return to the UI thread for rendering.

Nothing here is new; this is how we have written standard, smooth frontend applications on other platforms (iOS, Android, Windows, macOS, JVM) ever since multi-threaded CPUs arrived.

GitHub Source: https://github.com/gaplo917/react-offload-ui-thread-research

Appendix

UI Thread (Blocking)

The UI thread, which is already busy with rendering, also has to work on the calculation task.

// file:SomeListBlocking.tsx

function TabContent({ index, base, pow, style, isScrolling }: TabContentProps) {

// Hooks must be called unconditionally (Rules of Hooks), so the scrolling check lives inside useMemo.

// While the user is scrolling, skip the computation and show the loading component instead.

const content = useMemo(

() =>

isScrolling ? (

<Loading index={index} />

) : (

<ComputeResult index={index} base={base} pow={pow} result={compute(base, pow)} />

),

[index, base, pow, isScrolling],

)

return <p style={{ padding: 8, ...style }}>{content}</p>

}

export default function SomeListBlocking() {

return (

<VirtualList

rowRendererProvider={(base, pow) => ({

key,

index,

style,

isScrolling,

}) => (

<TabContent

key={key}

index={index}

base={base}

pow={pow}

style={style}

isScrolling={isScrolling}

/>

)}

/>

)

}

Web Worker (Singleton)

A single extra worker does all the computations. It is the easiest way to integrate Comlink correctly, and it almost always improves UI responsiveness.

// file:SomeListSingleton.tsx

// the actual web worker function from comlink

import { compute } from '../../workers/compute.worker.singleton'

function TabContent({ index, base, pow, style }: TabContentProps) {

// create future data: wrap the promise so that it fulfills the Suspense component contract

const suspendableData = useSuspendableData(() => compute(base, pow), [

base,

pow,

])

return (

<p style={{ padding: 8, ...style }}>

<ErrorBoundary

key={`${base}-${pow}`}

fallback={<ComputeErrorMessage index={index} base={base} pow={pow} />}

>

<React.Suspense fallback={<Loading index={index} />}>

<Suspendable data={suspendableData}>

{(data) => (

<ComputeResult

index={index}

base={base}

pow={pow}

result={data}

/>

)}

</Suspendable>

</React.Suspense>

</ErrorBoundary>

</p>

)

}

export default function SomeListSingleton() {

return (

<VirtualList

rowRendererProvider={(base, pow) => ({ key, index, style }) => (

<TabContent

key={key}

index={index}

base={base}

pow={pow}

style={style}

/>

)}

/>

)

}

Web Worker (Pool)

When you have a lot of computations, you might need a worker pool and distribute the jobs to the workers in parallel to speed up the calculations.

// file:SomeListWorkerPool.tsx

// the actual web worker from comlink

import ComputeWorker from 'comlink-loader!../../workers/compute.worker'

function TabContent({ index, base, pow, style, worker }: TabContentProps) {

const suspendableData = useSuspendableData<number>(

() => worker.compute(base, pow),

[base, pow],

)

return (

<p style={{ padding: 8, ...style }}>

<ErrorBoundary

key={`${base}-${pow}`}

fallback={<ComputeErrorMessage index={index} base={base} pow={pow} />}

>

<React.Suspense fallback={<Loading index={index} />}>

<Suspendable data={suspendableData}>

{(data) => (

<ComputeResult

index={index}

base={base}

pow={pow}

result={data}

/>

)}

</Suspendable>

</React.Suspense>

</ErrorBoundary>

</p>

)

}

export default function SomeListWorkerPool() {

const [poolSize, setPoolSize] = useState(4)

// create a pool of workers

const workerPool = useMemo(

() => new Array(poolSize).fill(null).map(() => new ComputeWorker()),

[poolSize],

)

return (

<>

<VirtualList

headerComp={() => (

<TextField

id="standard-basic"

label="Worker Pool Size"

required

type="number"

variant="outlined"

defaultValue={poolSize}

inputProps={{ step: 1 }}

onChange={(event: React.ChangeEvent<{ value: string }>) => {

setPoolSize(Number(event.target.value))

}}

/>

)}

rowRendererProvider={(base, pow) => ({ key, index, style }) => (

<TabContent

key={key}

index={index}

base={base}

pow={pow}

style={style}

worker={workerPool[index % poolSize] /* distribute workload */}

/>

)}

/>

</>

)

}

Web Worker (Dedicated Worker)

In an ideal world with unlimited resources, you could dedicate a new worker to each new task. In the real world, this is not efficient.

// file:SomeListDedicatedWorker.tsx

// the actual web worker from comlink

import ComputeWorker from 'comlink-loader!../../workers/compute.worker'

// no way to dispose the worker if using a comlink-loader, until this pr is merged

// https://github.com/GoogleChromeLabs/comlink-loader/pull/27

function TabContent({ index, base, pow, style }: TabContentProps) {

const suspendableData = useSuspendableData<number>(async () => {

// create a dedicated worker for each rendering

const worker = new ComputeWorker()

return worker.compute(base, pow)

}, [base, pow])

return (

<p style={{ padding: 8, ...style }}>

<ErrorBoundary

key={`${base}-${pow}`}

fallback={<ComputeErrorMessage index={index} base={base} pow={pow} />}

>

<React.Suspense fallback={<Loading index={index} />}>

<Suspendable data={suspendableData}>

{(data) => (

<ComputeResult

index={index}

base={base}

pow={pow}

result={data}

/>

)}

</Suspendable>

</React.Suspense>

</ErrorBoundary>

</p>

)

}

export default function SomeListDedicatedWorker() {

return (

<VirtualList

rowRendererProvider={(base, pow) => ({ key, index, style }) => (

<TabContent

key={key}

index={index}

base={base}

pow={pow}

style={style}

/>

)}

/>

)

}

Patreon Backers

Thank you for being so supportive ❤️❤️❤️. Your support made this R&D article always free, open, and accessible. It also allows me to focus on my writings that bring a positive impact to you and the other readers among the global technical community. Become a Patreon Backer!

Backers:

- Anthony Siu (Gold)

- Martin Ho (Gold)

- Chan Paul (Gold)

- Jay Chau (Gold)

- Mat (Gold)

- Raymond An

- Chi Hang Chan